Google’s DolphinGemma AI Decodes Dolphin Sounds

MOUNTAIN VIEW, Calif.: On April 14, 2025, Google unveiled DolphinGemma AI, a model designed to help decode dolphin vocalizations.

Announced on National Dolphin Day, the AI aims to analyze clicks, whistles, and pulses of Atlantic spotted dolphins. Developed with Georgia Tech and the Wild Dolphin Project, DolphinGemma AI seeks to uncover patterns in dolphin communication, potentially enabling human-dolphin interaction. The launch highlights Google’s push into AI-driven interspecies research.

DolphinGemma AI’s Breakthrough

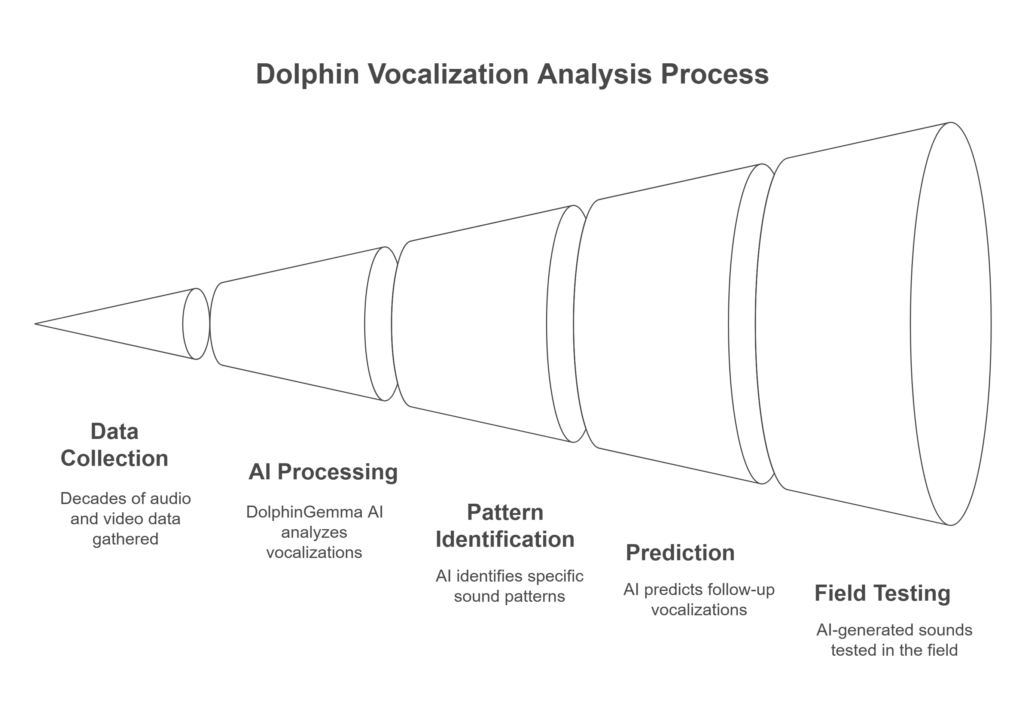

Google’s DolphinGemma AI, a 400-million-parameter model, processes dolphin vocalizations using the SoundStream tokenizer. Built on Google’s Gemma family of models, it is compact enough to run on Pixel phones, enabling real-time analysis in the field. It draws on the Wild Dolphin Project’s archive: since 1985, the project has studied wild dolphins in the Bahamas, amassing decades of audio and video recordings of their vocalizations, behavior, and social interactions. DolphinGemma AI identifies sound patterns and predicts likely follow-up vocalizations, much as language models predict the next words in human speech.
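
The "predict what comes next" idea can be illustrated with a deliberately tiny stand-in. The sketch below is not DolphinGemma, which is a 400-million-parameter neural network; it is a simple bigram counter, and it assumes the recordings have already been converted into integer token IDs. The IDs are invented for illustration, and the SoundStream tokenizer is not reproduced. The counter learns which token most often follows each token and predicts that one as the next.

```python
from collections import Counter, defaultdict


def train_bigram(sequences):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    followers = counts.get(token)  # .get() does not insert into the defaultdict
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical token IDs standing in for tokenized audio; not real dolphin data.
recordings = [
    [3, 7, 7, 2],
    [3, 7, 2, 5],
    [7, 2, 5, 5],
]
model = train_bigram(recordings)
print(predict_next(model, 7))  # 2
```

A real model conditions on long stretches of preceding audio rather than on a single token, but the underlying task, guessing the most likely continuation, is the same in spirit.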

The model examines signature whistles, the distinctive calls dolphins appear to use as names, as well as bursts of sound associated with specific behaviors such as courtship. “DolphinGemma AI spots subtleties humans miss,” said Dr. Denise Herzing, founder of the Wild Dolphin Project. By generating dolphin-like sounds, the AI could help researchers test how dolphins respond, advancing the quest to learn whether dolphins have a language-like system. Field tests begin this summer, using Pixel 9 phones for enhanced processing.

Two-Way Communication Efforts

DolphinGemma AI works alongside the Cetacean Hearing Augmentation Telemetry (CHAT) system, a tool for two-way interaction. CHAT, running on Pixel phones, plays synthetic whistles linked to objects such as seaweed or scarves. When dolphins mimic these sounds, CHAT alerts researchers, building a shared vocabulary. The Wild Dolphin Project has pursued this approach since 2012, with the goal of creating a communication system simple enough for both humans and dolphins to use.
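
CHAT's matching step can be pictured as a lookup against a small vocabulary. The following is a hypothetical sketch, not the CHAT software: it assumes each synthetic whistle has already been reduced to a short sequence of invented token IDs, and it checks whether a recording contains one of those sequences exactly. The names `VOCABULARY` and `detect_mimic` are illustrative only.

```python
# Hypothetical vocabulary: each synthetic whistle is represented by a short,
# made-up sequence of audio-token IDs and is linked to one object.
VOCABULARY = {
    "seaweed": (4, 9, 9, 1),
    "scarf": (6, 2, 8),
}


def contains_run(recording, pattern):
    """True if `pattern` appears as a contiguous run inside `recording`."""
    n = len(pattern)
    return any(
        tuple(recording[i:i + n]) == pattern
        for i in range(len(recording) - n + 1)
    )


def detect_mimic(recording, vocabulary=VOCABULARY):
    """Return the object whose whistle was mimicked, or None if none matched."""
    for obj, whistle in vocabulary.items():
        if contains_run(recording, whistle):
            return obj
    return None


print(detect_mimic([0, 6, 2, 8, 3]))  # scarf
```

Exact matching keeps the sketch short; a system working with real underwater audio would need to tolerate background noise and imperfect imitations.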

Through minimally invasive research on wild dolphins, the project has identified, for example, which sounds mothers and calves use to reunite. DolphinGemma AI speeds this work up considerably: it can flag patterns that previously would have taken years to find. Google’s use of Pixel phones reduces the need for costly specialized hardware, making the technology more accessible to marine researchers. The model’s compact size lets it run efficiently during fieldwork at sea.

Broader Context and Challenges

Dolphins are among Earth’s smartest creatures, known for cooperation and self-recognition. Scientists have long studied the complexity of their vocalizations in hopes of deciphering them. The Wild Dolphin Project’s dataset, covering generations of dolphins, offers unique insights. Drawing on it, DolphinGemma AI explores whether dolphin vocalizations actually constitute a structured language, a major question that remains unanswered.

Other teams are also using artificial intelligence to study animal communication, in species ranging from crows to whales. Noise pollution from large ships endangers dolphins, which rely on sound to navigate and hunt. DolphinGemma AI could aid conservation by revealing how noise disrupts their communication. However, adapting the model to other species, such as bottlenose dolphins, may require fine-tuning, because vocalizations vary between species.

Open Model and Future Plans

Google plans to release DolphinGemma AI as an open model this summer, inviting researchers worldwide to use it. Although it was trained on recordings of Atlantic spotted dolphins, the model should be adaptable to other cetaceans with some fine-tuning. This open approach aims to speed up discoveries in marine biology. Researchers hope DolphinGemma AI will uncover hidden structures in dolphin sounds, bringing interspecies communication closer.

The model will be deployed in the field starting in summer 2025, when researchers will test how much difference it makes in practice. Moving CHAT to Pixel 9 phones should make the system more interactive, allowing faster responses when dolphins mimic its whistles. Google’s partnership with Georgia Tech and the Wild Dolphin Project shows how AI can be put to work in real-world field research, and it could inspire similar efforts elsewhere in animal research.

Conclusion and Next Steps

Google’s DolphinGemma AI marks a bold step in decoding dolphin vocalizations, with implications for science and conservation. If successful, it could reveal whether dolphins communicate with language-like complexity, reshaping our view of animal intelligence. The open model release will expand its reach, enabling researchers to study other cetacean species and refine the AI’s capabilities.

The summer 2025 field season will be critical, as DolphinGemma AI faces real-world tests. Success could lead to a shared human-dolphin vocabulary, fostering deeper connections with marine life. Challenges remain, including adapting to diverse dolphin populations and addressing ocean noise. Google’s initiative invites global collaboration, paving the way for breakthroughs in understanding one of Earth’s most communicative creatures.