Cerebras Unveils CS-3 AI Accelerator with Record-Breaking Speed

SUNNYVALE, Calif. — Cerebras Systems launched its CS-3 AI accelerator on March 13, 2024, claiming the title of the world’s fastest AI chip.

The announcement came during an AI Day event at the company’s headquarters. The CS-3 doubles the performance of its predecessor, the CS-2. The goal? To train massive AI models faster than ever.

A Giant Leap in AI Power

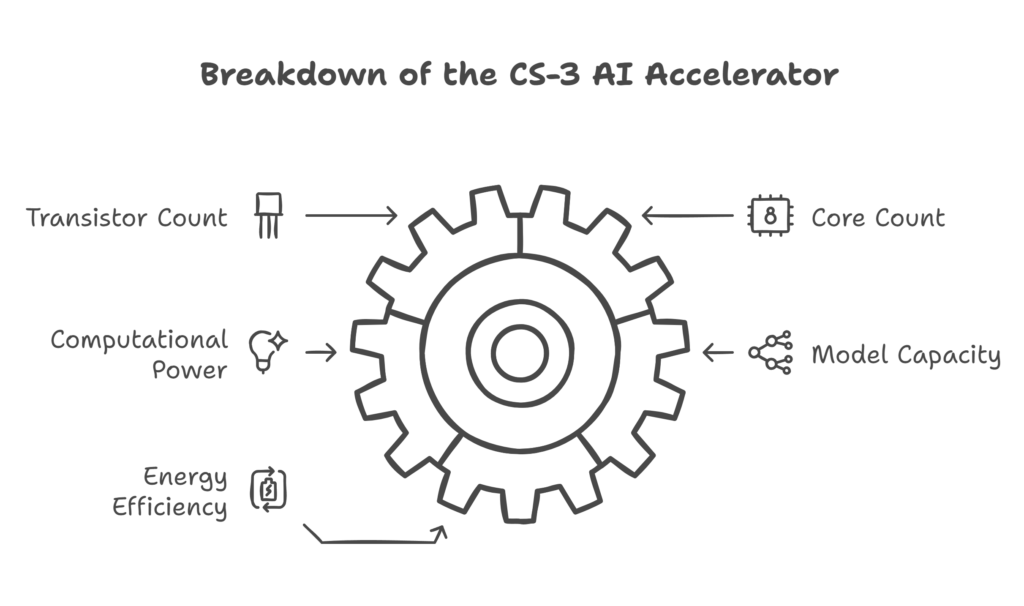

The CS-3 AI accelerator is a big deal. At its heart is the Wafer Scale Engine-3, or WSE-3, a chip the size of a dinner plate. TSMC builds it on a 5-nanometer process. That’s tiny but mighty. It packs 4 trillion transistors onto a chip about 57 times larger than Nvidia’s H100 GPU. It also has 900,000 AI cores. Together they deliver 125 petaflops of peak AI performance. That’s a huge jump from the CS-2.
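A quick sketch of the size comparison. The H100 figures (about 80 billion transistors on an 814 mm² die) and the WSE-3 area (about 46,225 mm²) are public spec values assumed here. They do not come from Cerebras’s announcement. On those numbers, the 57x gap is in chip area, and the transistor gap comes out to roughly 50x.

```python
# Back-of-envelope WSE-3 vs. H100 comparison.
# Assumed public spec values (not from the article): H100 ~80e9 transistors
# on an ~814 mm^2 die; WSE-3 ~46,225 mm^2. 4e12 transistors is from the article.
WSE3_TRANSISTORS = 4e12
H100_TRANSISTORS = 80e9
WSE3_AREA_MM2 = 46_225
H100_AREA_MM2 = 814


def ratio(a: float, b: float) -> float:
    """Return how many times larger a is than b."""
    return a / b


area_ratio = ratio(WSE3_AREA_MM2, H100_AREA_MM2)
transistor_ratio = ratio(WSE3_TRANSISTORS, H100_TRANSISTORS)

print(f"Area: {area_ratio:.0f}x")               # Area: 57x
print(f"Transistors: {transistor_ratio:.0f}x")  # Transistors: 50x
```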

Cerebras aims to solve a problem: big AI models take too long to train. Think GPT-4 or Claude. They need tons of computing power. The CS-3 is designed to cut that time way down. It can train models with up to 24 trillion parameters. Cerebras says that’s 10 times bigger than GPT-4, though OpenAI has never disclosed GPT-4’s size. Plus, it draws about 23 kilowatts, the same as the CS-2, so the extra speed comes without extra power.

How It Stacks Up

Speed That Shocks

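The CS-3 AI accelerator isn’t just big. It’s fast. Very fast. It doubles the performance of the CS-2. On memory bandwidth, it leaves Nvidia’s H100 in the dust. The H100 maxes out at 3.9 terabytes per second. The CS-3’s on-chip memory hits 21 petabytes per second. That’s more than 5,000 times as much. The comparison isn’t quite apples to apples, though. The CS-3 figure covers memory built into the chip itself. The H100 figure covers separate memory stacks that sit beside the chip.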
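The “thousands of times” claim can be checked with one division. This sketch takes both bandwidth figures at face value and assumes decimal units (1 TB = 10^12 bytes, 1 PB = 10^15 bytes).

```python
# Ratio of CS-3 on-chip memory bandwidth to H100 memory bandwidth.
# Assumption: decimal units; both figures taken as reported in the article.
TB = 1e12  # bytes per terabyte
PB = 1e15  # bytes per petabyte

cs3_bandwidth = 21 * PB    # bytes per second
h100_bandwidth = 3.9 * TB  # bytes per second

bandwidth_ratio = cs3_bandwidth / h100_bandwidth
print(f"{bandwidth_ratio:,.0f}x")  # 5,385x
```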

A Team Effort

Cerebras didn’t stop at the chip. It also teamed up with Qualcomm. Why? To make AI inference cheaper. Inference is when a trained AI model puts what it learned to work. Qualcomm’s Cloud AI 100 Ultra will handle that part. Together, the companies aim to cut inference costs by up to 10 times. “WSE-3 is the fastest AI chip in the world,” said Andrew Feldman, Cerebras CEO. “It’s built for cutting-edge AI work.”

The Backstory of Cerebras

Cerebras started in 2016. It’s a startup with a bold idea: make chips as big as wafers. Normal chips are small squares cut from a wafer. Wafers are whole silicon discs, 300 millimeters wide. Cerebras uses nearly the entire wafer as a single chip. The first Wafer Scale Engine came in 2019. It shocked the tech world. Why? Earlier attempts at wafer-scale chips had failed, and few thought it could work. But it did.

The CS-2 followed in 2021. It trained huge models fast. Companies like GSK used Cerebras systems for drug research. Now, the CS-3 pushes further. It’s part of a bigger plan: Cerebras and G42 are building supercomputers together. Condor Galaxy 3 is next. It’ll use 64 CS-3 systems for 8 exaflops of AI compute. It’s set for Q2 2024.
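The Condor Galaxy 3 total follows directly from the per-system figure. This minimal sketch assumes each of the 64 systems contributes its full 125-petaflop peak and that the numbers add linearly. That is a peak-rating sum. Real workloads rarely scale perfectly.

```python
# Peak compute of Condor Galaxy 3 from the per-system CS-3 figure.
# Assumption: linear scaling of peak ratings across systems.
SYSTEMS = 64
PFLOPS_PER_CS3 = 125  # peak AI petaflops per CS-3, per Cerebras

total_pflops = SYSTEMS * PFLOPS_PER_CS3
total_exaflops = total_pflops / 1000  # 1 exaflop = 1,000 petaflops
print(total_exaflops)  # 8.0
```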

Why This Matters Now

AI is everywhere. Chatbots, self-driving cars, and drug discovery all need computing power. GPUs have ruled the game. By some estimates, Nvidia holds about 85% of the AI chip market. But Cerebras challenges that. It pitches the CS-3 as simpler. Instead of splitting a model across thousands of GPUs, Cerebras says one CS-3 can do the job. That saves time and money.

The timing is key. AI demand is soaring. Companies race to build bigger models. The CS-3 fits that need. It’s not just for tech giants. Enterprises and governments want in. The chip’s scale is unmatched. It could change how AI grows.

What’s Next for the CS-3?

The CS-3 AI accelerator has big potential. It’s shipping to select customers now. Condor Galaxy 3 is the first big test. If it works, more could follow. Cerebras plans nine supercomputers by year-end. That’s ambitious. It could mean tens of exaflops online soon.

But there are hurdles. Cost is one. A full CS-3 cluster isn’t cheap. Competition is another. Nvidia won’t sit still. Neither will AMD or Intel. Still, the CS-3 sets a new bar. It could spark an AI speed race. For now, Cerebras claims the lead. The tech world is watching.