OpenAI Strikes Broadcom Deal for Custom AI Chips in Split From Nvidia

SAN FRANCISCO — OpenAI, the maker of ChatGPT, announced on March 13, 2025, a partnership with Broadcom to make custom AI chips in an attempt to reduce its dependence on Nvidia.

The move, which will initially roll out in Silicon Valley, is intended to help OpenAI reduce costs and protect its access to chips for its growing needs.

A New Chip Strategy Unveiled

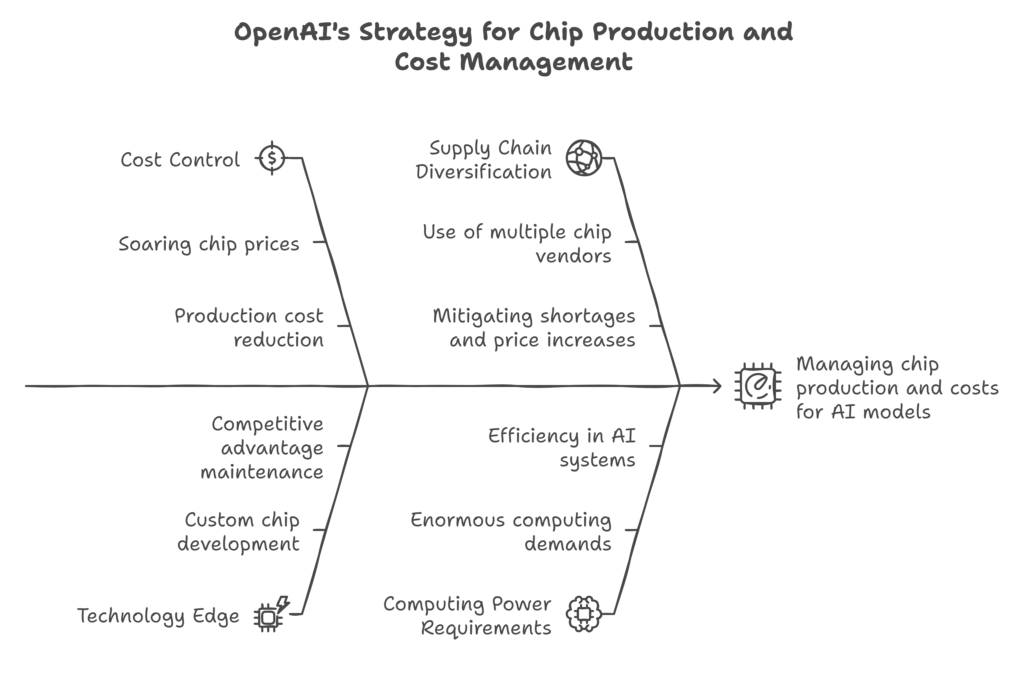

Turning to Broadcom is a major shift for OpenAI. Nvidia’s powerful chips have powered OpenAI’s A.I. models for years. But with chip prices soaring and demand surging, OpenAI wants more control over its supply. By creating its own chips, it hopes to cut costs and maintain its technology edge. The initial batch is scheduled to start production in 2026, with Taiwan Semiconductor Manufacturing Co. (TSMC) doing the manufacturing.

The agreement also calls for the use of AMD chips alongside Nvidia’s. This mix insulates OpenAI from shortages and price increases. “We’re diversifying to stay ahead,” an OpenAI spokesperson said. The company’s A.I. systems, such as ChatGPT, require enormous computing power. Custom chips could help make that computing cheaper and faster.

Why Move Away from Nvidia?

Nvidia controls more than 80% of the AI chip market. Its GPUs, or graphics processing units, are the gold standard for training A.I. models. But such power comes at a price. A single Nvidia H100 chip can cost $30,000, and large AI projects require thousands of them. OpenAI, a Microsoft partner, has relied heavily on Nvidia chips through Microsoft’s Azure cloud.

Now, costs are biting. OpenAI predicts a $5 billion loss this year, even with $3.7 billion in revenue. Custom chips might narrow that gap. Broadcom brings expertise in chip design and can tailor chips for A.I. tasks like “inference,” when models make predictions. It is also expected to help keep production on schedule at TSMC, the world’s largest contract chip maker.

This isn’t just about money. Microsoft and Meta have also struggled with shortages of Nvidia chips. OpenAI’s move reflects a trend: Amazon, Google and others are creating chips of their own. Teaming with Broadcom spares OpenAI the need to build its own factories, a plan it abandoned because the costs and timelines were so great.

What’s at Stake for OpenAI?

The stakes are high. OpenAI’s AI systems underpin everything from chatbots to research tools. More users mean more demand for computing power, and Nvidia’s supply chain cannot always keep up. Custom chips could offer OpenAI flexibility and bargaining power. If they work, they could even surpass Nvidia’s products on some tasks.

Broadcom shares rose 4% following the news, reflecting market buzz. AMD stock also rose 3.7% after OpenAI said it plans to use AMD’s MI300X chips. OpenAI’s chip team has around 20 people and is headed by two ex-Google engineers, Thomas Norrie and Richard Ho. They’re targeting inference chips, which analysts say will soon eclipse training chips in demand.

Still, risks linger. Designing chips is a slow and expensive process — up to $500 million for a single design, many experts estimate. OpenAI can’t ditch Nvidia entirely — not yet. It requires Nvidia’s newest Blackwell chips for the most advanced work. So it’s a balancing act: diversify, but don’t burn bridges.

A Potential Game-Changer on the Horizon?

OpenAI’s chip push could shake up the tech industry. If it gets this right, others may follow, eating into Nvidia’s dominance. Production begins in 2026, so results are years away. In the meantime, OpenAI will mix AMD and Nvidia chips to meet demand. The company’s $500 billion “Stargate” project with Microsoft suggests even larger ambitions.

For now, it’s a waiting game. Success would mean inexpensive, speedy AI for everybody. Failure could leave OpenAI scrambling. Either way, this move marks a new era. Tech giants aren’t just buying chips anymore; they’re making them, too.